
Swift Machine Learning: Using Apple Core ML


A sub-discipline of artificial intelligence (AI), machine learning (ML) focuses on the development of algorithms to build systems capable of learning from, and making decisions based on, data. In iOS development, ML allows us to create applications that can identify patterns and make predictions, adapting a user’s experience by learning from their behaviour. It’s this capability that drives functionality such as the content recommendations, automated workflows and predictive suggestions that have become so familiar to users of modern apps from ecommerce to email.

This functionality has become so ubiquitous that it’s now conspicuous by its absence, and applications that do not offer tailor-made experiences driven by ML can feel hopelessly outdated. User expectations are higher than ever, and to stay relevant and competitive it’s crucial we embrace these advanced tools and technologies.

Today we’re going to take a look at the Core ML framework in the iOS SDK to understand how it is optimized for performance on Apple devices. We’ll be considering how we can leverage the dedicated hardware to execute machine learning models and demonstrating how we can use a combination of hardware and framework to execute complex computations in real time. We’ll be covering:

- Machine learning model flow

- Apple iOS SDK machine learning frameworks

- iOS app development using Core ML

- Advanced ML techniques and best practices

- Real-world applications and case studies

Ready? Let’s dive in!


Machine learning model flow

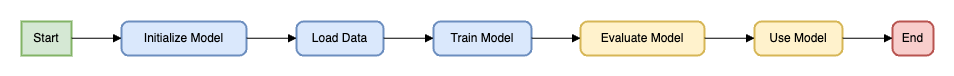

The machine learning model flow involves several stages from initialization and data collection to training, evaluation and deployment.

Below is a basic ML workflow:

Now let’s take a look at the ML frameworks available in iOS SDK.

Apple iOS SDK machine learning frameworks

The Apple iOS SDK has many tools, frameworks and libraries to support developers in harnessing machine learning capabilities such as image recognition and natural language analysis to build smarter applications. These frameworks can be easily used in Swift, making them ideal for integrating machine learning into iPhone and iPad apps. Let’s check out these frameworks and what they can offer.

Core ML framework

The Core ML framework acts as the backbone when implementing machine learning in iOS apps. It can be easily integrated to run existing trained models and, for models built to be updatable, to personalize them on the device based on data captured.

Key strengths include:

- Designed for iOS: Core ML framework is tuned for iOS, making it ideal for executing machine learning models in iOS apps.

- Compatibility and support: Models built with popular frameworks such as TensorFlow and PyTorch can be converted to the Core ML format with Core ML Tools, so integrating them is straightforward.

- Security and data protection: Provides the capability to infer models locally on the device itself, ensuring the safety and privacy of user data and robust overall security.

Use cases could be:

- Images: Identifying and categorizing images.

- Objects: Detecting and tracking objects.

- Natural language: Understanding natural language.

- Prediction: Forecasting future trends through data analytics.

Vision framework

Apple’s Vision framework offers stable APIs to perform vision-related tasks such as image analysis, object, face and text detection, and barcode reading.

Key strengths include:

- Reduced complexity: Easy-to-use APIs mean there is no need to implement complex logic and algorithms.

- Responsiveness: Works in tandem with device hardware to accelerate task processing to real-time, increasing responsiveness and overall user experience.

- Compatibility: Can easily be integrated with Core ML to combine computer vision algorithms with custom machine learning models.

Use cases could be:

- Faces: Supports face detection.

- Objects: Enables object recognition.

- Codes: Supports barcode and QR code scanning in real time.

- Text: Helps with text detection and OCR.

- Images: Helps with image classification.
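To make the barcode and QR code use case above concrete, here is a minimal sketch using Vision’s `VNDetectBarcodesRequest`. The function name `detectBarcodePayloads(in:)` is our own, and the sketch assumes a recent SDK (iOS 15 / macOS 12 or later) where `results` is already typed as barcode observations.

```swift
import CoreGraphics
import Vision

/// Returns the decoded text payloads of any barcodes or QR codes Vision finds in the image.
/// (Hypothetical helper name; not part of the Vision framework.)
func detectBarcodePayloads(in image: CGImage) throws -> [String] {
    let request = VNDetectBarcodesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])

    // perform(_:) runs synchronously, so call it off the main thread in a real app.
    try handler.perform([request])

    // Assumes an SDK where results is [VNBarcodeObservation]?.
    let observations = request.results ?? []
    return observations.compactMap { $0.payloadStringValue }
}
```

In an app we would typically feed this a `CGImage` taken from a camera frame or a `UIImage`’s `cgImage` property and show the payloads to the user.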

Create ML

Create ML is a great tool for training custom machine learning models on macOS. It does not require detailed programming knowledge from the person training the model.

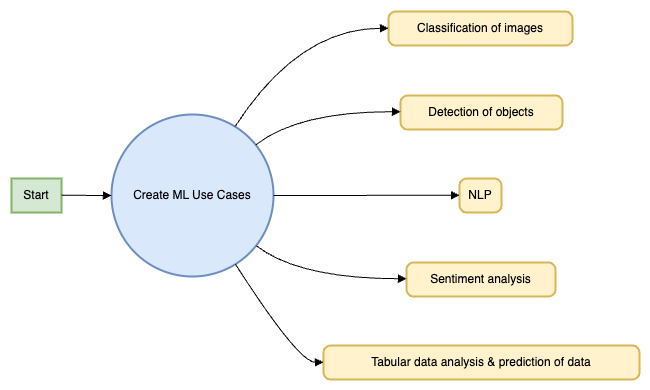

Below is a Create ML workflow:

Key strengths include:

- Ease of use: A GUI-based tool that makes it easy to create and train machine learning models from labeled data.

- Compatibility: Integrates easily with Xcode, so trained models can be exported to Core ML and used in iOS apps.

- Integration: Create ML is quick to fit into a workflow and provides instant feedback on model performance, which can guide the next iteration.

Use cases could be:

- Images: Identifying and categorizing images.

- Objects: Detecting and tracking objects.

- Natural language: Understanding natural language.

- Sentiment: Determining the sentiment expressed in a piece of text.

- Data analysis and prediction: Forecasting future trends through tabular data analysis.

Natural Language framework

Apple’s Natural Language framework is made up of APIs that can perform natural language processing tasks, such as tokenization and identification of language. The Natural Language framework has a range of NLP tools for analysing and processing textual data.

Key strengths include:

- Multiple language support: Can support multiple languages, detecting the language of text to support globally distributed apps.

- Integration with Core ML: Can be easily integrated with Core ML so NLP algorithms and custom machine learning models can be deployed together.

Use cases could be:

- Language Identification: Automatically identifying the language of a given text.

- Named Entity Recognition (NER): Identifying and classifying proper names in text (people, organizations, locations).

- Sentiment Analysis: Determining the sentiment expressed in a piece of text.
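The three use cases above map fairly directly onto built-in Natural Language APIs. The sketch below is one way to wrap them; the function names are our own, and the built-in sentiment scheme assumes iOS 13 / macOS 10.15 or later.

```swift
import NaturalLanguage

/// Language identification: the most likely language of the text, if any.
func dominantLanguage(of text: String) -> NLLanguage? {
    NLLanguageRecognizer.dominantLanguage(for: text)
}

/// Named entity recognition: people, places and organizations mentioned in the text.
func namedEntities(in text: String) -> [(entity: String, type: NLTag)] {
    let tagger = NLTagger(tagSchemes: [.nameType])
    tagger.string = text
    let wanted: Set<NLTag> = [.personalName, .placeName, .organizationName]
    var found: [(entity: String, type: NLTag)] = []
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .nameType,
                         options: [.omitWhitespace, .omitPunctuation, .joinNames]) { tag, range in
        if let tag = tag, wanted.contains(tag) {
            found.append((String(text[range]), tag))
        }
        return true // keep enumerating
    }
    return found
}

/// Sentiment analysis: a score from -1.0 (negative) to 1.0 (positive), or nil if unavailable.
func sentimentScore(of text: String) -> Double? {
    guard !text.isEmpty else { return nil }
    let tagger = NLTagger(tagSchemes: [.sentimentScore])
    tagger.string = text
    let (tag, _) = tagger.tag(at: text.startIndex, unit: .paragraph, scheme: .sentimentScore)
    return tag.flatMap { Double($0.rawValue) }
}
```

All three run entirely on the device, which fits the privacy goals discussed later in the article.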

Fantastic! Now we’re familiar with the ML frameworks we can work with, let’s take a look at how we might integrate them when developing an iOS app.

iOS app development using Core ML

By integrating machine learning models we can make our apps more responsive and intuitive, delivering users a personalised experience based on their own habits and preferences. To demonstrate this we’re going to use the example of integrating an image classification model into a photo editing iOS app.

Let’s get started…

Choose the right model

It’s essential to choose the appropriate Core ML model for the functionality we need. In our example of a photo editing app, we need to categorize images based on their nature, so we’ll select a pre-trained image classification model built with a framework such as PyTorch or TensorFlow, then convert it to the Core ML format so we can easily integrate it.

Convert the model to Core ML

Now we’ve selected the right model, we’ll need to convert it to the Core ML format using a conversion tool such as Apple’s Core ML Tools. Once converted, we’ll save the model as a .mlmodel file, which stores the model architecture and parameters and can be added to the Xcode project.

Integrate the Core ML model into our Xcode project

Next we’ll need to integrate the model into our Xcode project, and to do this we’ll add the .mlmodel file to the project. After this file has been added, Xcode will generate a Swift class that wraps the Core ML API for tasks like making predictions.
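Besides the generated class, we can also load a compiled model directly and inspect what it expects as input. The sketch below is illustrative only: the helper names are our own, and the URL is assumed to point at a compiled `.mlmodelc` bundle (for example one found with `Bundle.main.url(forResource:withExtension:)`).

```swift
import CoreML
import Foundation

/// Builds a configuration that lets Core ML choose between CPU, GPU and Neural Engine.
func makeModelConfiguration() -> MLModelConfiguration {
    let configuration = MLModelConfiguration()
    configuration.computeUnits = .all
    return configuration
}

/// Loads a compiled model and prints each input's name and, for image inputs, the expected size.
/// (Hypothetical helper; the URL must point at a compiled .mlmodelc bundle.)
func printInputs(ofCompiledModelAt url: URL) throws {
    let model = try MLModel(contentsOf: url, configuration: makeModelConfiguration())
    for (name, description) in model.modelDescription.inputDescriptionsByName {
        if let constraint = description.imageConstraint {
            print("\(name): image \(constraint.pixelsWide)x\(constraint.pixelsHigh)")
        } else {
            print("\(name): \(description)")
        }
    }
}
```

Knowing the expected image size up front is useful for the pre-processing step that follows.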

Pre-process the data

To improve the quality of the results, input data should be pre-processed before it’s sent to be processed by the model. An example of pre-processing would be resizing images to match the input size requirements of the model, and we can write code for these pre-processing steps.
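As a minimal sketch of the resizing step, the function below draws a `CGImage` into a `CVPixelBuffer` of a given size, which is the type Core ML image inputs usually accept. The name `makePixelBuffer(from:width:height:)` is our own, and the sketch simply stretches the image to fit, so it does not preserve the aspect ratio.

```swift
import CoreGraphics
import CoreVideo

/// Scales the image to width x height and returns it as a 32-bit BGRA pixel buffer.
/// (Hypothetical helper; the image is stretched, not cropped.)
func makePixelBuffer(from image: CGImage, width: Int, height: Int) -> CVPixelBuffer? {
    let attributes = [
        kCVPixelBufferCGImageCompatibilityKey: kCFBooleanTrue,
        kCVPixelBufferCGBitmapContextCompatibilityKey: kCFBooleanTrue
    ] as CFDictionary

    var buffer: CVPixelBuffer?
    let status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                     kCVPixelFormatType_32BGRA, attributes, &buffer)
    guard status == kCVReturnSuccess, let pixelBuffer = buffer else { return nil }

    // Lock the buffer while Core Graphics writes into its memory.
    CVPixelBufferLockBaseAddress(pixelBuffer, [])
    defer { CVPixelBufferUnlockBaseAddress(pixelBuffer, []) }

    guard let context = CGContext(
        data: CVPixelBufferGetBaseAddress(pixelBuffer),
        width: width,
        height: height,
        bitsPerComponent: 8,
        bytesPerRow: CVPixelBufferGetBytesPerRow(pixelBuffer),
        space: CGColorSpaceCreateDeviceRGB(),
        bitmapInfo: CGImageAlphaInfo.premultipliedFirst.rawValue | CGBitmapInfo.byteOrder32Little.rawValue
    ) else { return nil }

    // Drawing into the full rect performs the resize.
    context.draw(image, in: CGRect(x: 0, y: 0, width: width, height: height))
    return pixelBuffer
}
```

In a UIKit app, a small `UIImage` extension could wrap this by passing the image’s `cgImage` property.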

Integrate the model into our app

To integrate the model into our app, we need to import the Core ML framework in the file containing our view controller class, and pass the input data (e.g. an image) to the model so it can return a prediction. We can allow the user to supply an image using the device’s camera, or select an existing image from the photo library.

Here’s an example of the code we could use:

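// Example of using the Core ML framework to classify an image.

import UIKit

import CoreML

class MLViewController: UIViewController {

// BGClassifierModel is the class Xcode generates from the .mlmodel file.

let model = try? BGClassifierModel(configuration: MLModelConfiguration())

func classifyTheImage(_ image: UIImage) {

// pixelBuffer(width:height:) is a custom UIImage helper, not a UIKit API.

// The size passed here must match the input size the model expects.

guard let model = model,

let pixelBuffer = image.pixelBuffer(width: 200, height: 200),

let predictionResult = try? model.prediction(image: pixelBuffer) else {

print("Classification failed")

return

}

// Handle prediction results here

print(predictionResult.classLabel)

}

}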
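An alternative we could consider is letting the Vision framework drive the model, since Vision scales and crops the image to the model’s input size for us. The sketch below assumes we already have an `MLModel` for an image classifier (for instance the `model` property of an Xcode-generated class); the function name is our own.

```swift
import CoreGraphics
import CoreML
import Vision

/// Runs an image classification model through Vision and returns the top label, if any.
/// (Hypothetical helper; assumes `model` is an image classifier.)
func topClassification(for image: CGImage, using model: MLModel) throws -> (label: String, confidence: Float)? {
    let visionModel = try VNCoreMLModel(for: model)
    let request = VNCoreMLRequest(model: visionModel)

    // Let Vision crop and scale the image to the size the model expects.
    request.imageCropAndScaleOption = .centerCrop

    // perform(_:) is synchronous, so run this off the main thread in a real app.
    try VNImageRequestHandler(cgImage: image, options: [:]).perform([request])

    // Classifier models produce classification observations, sorted by confidence.
    guard let best = (request.results as? [VNClassificationObservation])?.first else {
        return nil
    }
    return (best.identifier, best.confidence)
}
```

With this approach the manual resizing step becomes optional, at the cost of an extra framework dependency.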

Test the model’s performance

Now our model is integrated into our app, it’s crucial to test it for both accuracy and speed, and this testing can be done using test data or users’ real-time generated data. Test results can then be analysed and debugging can take place.

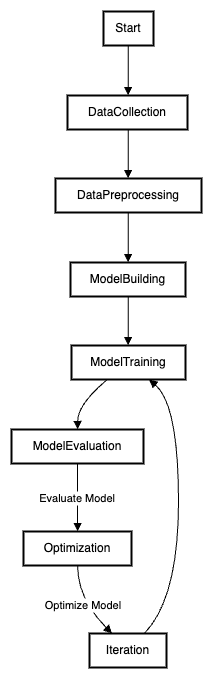
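To put numbers on accuracy and speed, a pair of small helpers can be enough to start with. This is only a sketch with names of our own choosing: `accuracy` compares predicted labels against expected ones, and `averageLatency` times any closure, such as a call to the model’s prediction method.

```swift
import Foundation

/// Fraction of predictions that match the expected labels (0.0 to 1.0).
func accuracy(predicted: [String], expected: [String]) -> Double {
    precondition(predicted.count == expected.count, "Both arrays must have the same length")
    guard !expected.isEmpty else { return 0 }
    let correct = zip(predicted, expected).filter { pair in pair.0 == pair.1 }.count
    return Double(correct) / Double(expected.count)
}

/// Average wall-clock time, in seconds, of running `work` the given number of times.
func averageLatency(runs: Int, _ work: () throws -> Void) rethrows -> TimeInterval {
    precondition(runs > 0, "runs must be positive")
    let start = Date()
    for _ in 0..<runs {
        try work()
    }
    return Date().timeIntervalSince(start) / Double(runs)
}
```

For example, `averageLatency(runs: 50) { _ = try? model.prediction(image: buffer) }` would give a rough per-prediction time, assuming `model` and `buffer` already exist in our app.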

Optimise and iterate the model

It’s important we keep optimizing our model based on data, errors and accuracy metrics. Techniques such as quantization and pruning can keep the model size down and improve on-device performance, though accuracy should be re-tested after applying them.

This process is visualized in the workflow below:

As you can see, testing and reiterating is a continuous process so we can keep making improvements.

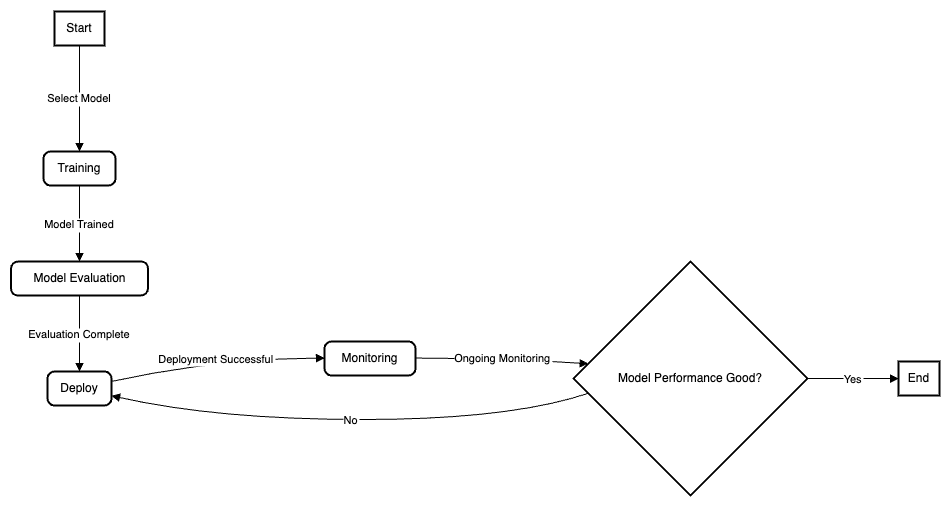
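To make the quantization idea mentioned above more concrete, here is a small, self-contained illustration of linear 8-bit quantization: each 32-bit weight is mapped to one of 256 levels, cutting storage to roughly a quarter. This is a teaching sketch with our own type and function names, not how Core ML or its conversion tools implement quantization.

```swift
/// Weights stored as 8-bit levels plus the two numbers needed to restore them.
struct QuantizedWeights {
    let values: [UInt8]
    let scale: Float
    let minimum: Float
}

/// Maps each weight to the nearest of 256 evenly spaced levels between min and max.
func quantize(_ weights: [Float]) -> QuantizedWeights {
    guard let low = weights.min(), let high = weights.max(), high > low else {
        // Empty input or all weights equal: every value sits at level 0.
        return QuantizedWeights(values: Array(repeating: 0, count: weights.count),
                                scale: 1,
                                minimum: weights.first ?? 0)
    }
    let scale = (high - low) / 255
    let values = weights.map { weight -> UInt8 in
        let level = ((weight - low) / scale).rounded()
        return UInt8(min(255, max(0, level))) // clamp against floating-point drift
    }
    return QuantizedWeights(values: values, scale: scale, minimum: low)
}

/// Restores approximate weights; each differs from the original by at most about scale / 2.
func dequantize(_ quantized: QuantizedWeights) -> [Float] {
    quantized.values.map { Float($0) * quantized.scale + quantized.minimum }
}
```

The small rounding error introduced here is the reason accuracy should be re-checked after a model is quantized.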

Deploy and monitor the model

The final step is to deploy the app with the integrated ML model to the App Store, so users can use it and we can monitor its performance and accuracy in production. Monitoring results will help in improving the model’s performance and capability over time.

Again, this process is visualized below:

Awesome! We can now build and deploy an app using a simple ML model. Let’s take a quick look at some advanced techniques and best practices for using ML in our iOS apps.

Advanced techniques and best practices

Machine learning integration in iOS apps requires us to select and deploy models that are accurate, responsive and, crucially, ensure the privacy and security of user data is not compromised. There are a number of approaches to achieving this, including:

Custom models

Creating custom models that provide personalized predictions based on users’ needs, behaviour and history adds a great deal of value. For example, an app feature that identifies medical conditions, such as skin conditions, from a supplied image could be created by training a custom model.

Here’s an example:

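// Example: Train a custom image classifier with Create ML (runs on macOS)

import CreateML

import Foundation

// Assumed layout: one sub-folder per label, each containing that label's images.

// The folder paths below are placeholders for illustration.

let trainingDir = URL(fileURLWithPath: "medical_condition_dataset/train")

let testDir = URL(fileURLWithPath: "medical_condition_dataset/test")

let classifier = try MLImageClassifier(trainingData: .labeledDirectories(at: trainingDir))

let trainingMetrics = classifier.trainingMetrics

// Evaluate on images the model has not seen during training.

let testMetrics = classifier.evaluation(on: .labeledDirectories(at: testDir))

print(trainingMetrics.classificationError, testMetrics.classificationError)

try classifier.write(to: URL(fileURLWithPath: "MedicalConditionClassifier.mlmodel"))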

Continuous improvement

It’s essential to keep improving the accuracy of our models and there are a number of techniques including data augmentation, transfer learning, and ensemble techniques which can be used for continuous improvement.

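As an example, for a sentiment analysis feature we could use transfer learning to fine-tune a pre-trained model on text from a specific domain. The fine-tuning itself happens at training time (for example in Create ML), and the app then loads the retrained model through the Natural Language framework, as shown below:

// Example: Load a fine-tuned sentiment model and classify text

import CoreML

import NaturalLanguage

// SentimentAnalysisModel is assumed to be the Xcode-generated class for the retrained model.

let sentimentModel = try NLModel(mlModel: SentimentAnalysisModel(configuration: MLModelConfiguration()).model)

// NLModel has no in-place update method; to adapt further, retrain on new domain data and ship the new model.

let label = sentimentModel.predictedLabel(for: "The delivery was quick and the support team was helpful.")

print(label ?? "No prediction")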

Privacy and data security

Privacy is one of the most important aspects, if not the most important, of using ML models in our apps, and it’s vital to ensure any sensitive data is encrypted before the model is deployed locally on the device. We also need to take steps to minimise the exposure of users’ personal data. Take, for example, a financial app that uses machine learning to detect possible fraudulent activity. When we process a user’s transactions locally on the device, we also need to keep that transaction data private, which could be done like this:

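// Example: Local model deployment for fraud detection

import CoreML

let fraudDetectModel = try FraudDetectionModel(configuration: .init())

// FraudDetectionModelInput is the input type Xcode generates alongside the model class.

// Its fields depend on the model, so the arguments are left as a placeholder.

let transactionRecord = FraudDetectionModelInput(/* the user's transaction details */)

let prediction = try fraudDetectModel.prediction(input: transactionRecord)

print(prediction) // Print the prediction result; the transaction data never leaves the device.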
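If transaction data also needs to be stored on the device, one option is to encrypt it first. The sketch below uses CryptoKit’s AES-GCM; the function names and error type are our own, and it assumes the key is kept somewhere safe such as the Keychain, which is not shown here.

```swift
import CryptoKit
import Foundation

enum EncryptionError: Error {
    case couldNotCombine
}

/// Encrypts data with AES-GCM and returns nonce + ciphertext + tag as one blob.
func encrypt(_ plaintext: Data, using key: SymmetricKey) throws -> Data {
    let sealedBox = try AES.GCM.seal(plaintext, using: key)
    guard let combined = sealedBox.combined else {
        throw EncryptionError.couldNotCombine
    }
    return combined
}

/// Decrypts a blob produced by encrypt(_:using:); throws if the data was tampered with.
func decrypt(_ ciphertext: Data, using key: SymmetricKey) throws -> Data {
    let sealedBox = try AES.GCM.SealedBox(combined: ciphertext)
    return try AES.GCM.open(sealedBox, using: key)
}
```

A typical flow would be to generate the key once with `SymmetricKey(size: .bits256)`, store it securely, and encrypt each record before writing it to disk.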

Ethical considerations

It’s important to use machine learning ethically and consider factors like fairness, transparency (of what, how and why data is being processed or stored), as well as accountability in the development and deployment of a machine learning model. We should implement bias detection for the outputs or predictions returned by our models. For example, a recruitment app should ensure the ML model is returning results based on the requirements and is equitable for all users.

Here’s an example:

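// Example: Screen a resume, then check the output for bias

import CoreML

let resumeClassifier = try ResumeScreeningModel(configuration: .init())

// ResumeScreeningModelInput is the input type Xcode generates for the model; its fields are left as a placeholder.

let resume = ResumeScreeningModelInput(/* the user's resume data */)

let prediction = try resumeClassifier.prediction(input: resume)

print(prediction)

// Next step (not shown here): compare predictions across applicant groups to detect bias, then adjust the training data.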
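One simple way to start checking for bias is to compare how often the model selects candidates from different groups. The sketch below computes a selection rate per group and the ratio between the lowest and highest rate. The types and names are our own, and this is only one of many possible fairness measures, not a complete audit.

```swift
/// One screening decision, tagged with the (hypothetical) group used for the audit.
struct ScreeningOutcome {
    let group: String
    let selected: Bool
}

/// Share of candidates selected within each group (0.0 to 1.0).
func selectionRates(_ outcomes: [ScreeningOutcome]) -> [String: Double] {
    let grouped = Dictionary(grouping: outcomes, by: { $0.group })
    return grouped.mapValues { members in
        Double(members.filter { $0.selected }.count) / Double(members.count)
    }
}

/// Lowest selection rate divided by the highest; values well below 1.0 suggest the
/// model favours some groups. Returns nil when no group has any selections.
func disparateImpactRatio(_ rates: [String: Double]) -> Double? {
    guard let lowest = rates.values.min(),
          let highest = rates.values.max(),
          highest > 0 else { return nil }
    return lowest / highest
}
```

If the ratio drops noticeably below 1.0, that is a signal to review the training data and features before trusting the model’s output.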

Great! Before we finish, let’s take a quick look at some familiar examples of machine learning in the real-world.

Real-world applications and case studies

Here are some examples of ML models in action:

Personal assistants and chatbots

Personal assistants such as Siri use natural language processing (NLP) and ML to understand and respond to user queries, set reminders, play music, and control smart home devices.

Recommendation systems

ML algorithms analyze user behavior and preferences to recommend movies, products, or music. For example, Netflix suggests movies based on a user’s viewing history and Amazon recommends products based on purchase history.

Image and speech recognition

Applications use deep learning models to recognize objects, faces, and speech. For example, Apple’s Face ID uses facial recognition to unlock devices.

To sum up

Machine learning applications are diverse and span across various industries, improving efficiency, personalization, and decision-making processes. As ML technology continues to advance, its integration into everyday applications will become even more prevalent, driving innovation and enhancing user experiences.

In this article we discussed machine learning integration, models and major frameworks such as Core ML, Vision and Create ML, which play an important role in integrating machine learning into iOS apps.

We demonstrated how Core ML is the fundamental framework to adopt and integrate ML in iOS and how models from TensorFlow can be converted to Core ML and used directly in our apps.

We looked at the process for building ML capabilities into an application, as well as how to do this responsibly by considering data security and other ethical issues.

Hopefully you now feel confident to embrace iOS machine learning capabilities and unlock the new horizons of innovation and creativity.
